Opinion: Wait times go down. Patient satisfaction goes up. What’s the matter with letting apps and AI run the ER?

My resident describes our next emergency room patient — a 32-year-old female with severe, crampy mid-abdominal pain, vomiting and occasional loose stools. The symptoms have been present for nearly a week, and there is tenderness to both sides of the upper abdomen. It could be a gallbladder problem, the resident says, hepatitis, pancreatitis, diverticulitis or an atypical appendicitis. She proposes routine blood tests along with an ultrasound and an abdominal CT scan.

This is the time-honored approach to an undifferentiated patient complaint: Generate a list of possible diagnoses, decide which represent a “reasonable” concern and use the results from further testing to conclude what’s going on. Yet increasingly the second phase of this process — evaluating which diagnoses represent a reasonable concern — is getting short shrift. It is the heavy lift of any patient encounter — weighing disease probabilities, probing for details. It’s often simpler, and faster, to cast a wide net, click the standard order for blood work and imaging, and wait for the results to pop up.

The issue of the “busy doctor ordering too many tests” has plagued medicine for decades. Now, as hospitals inject algorithms and technology into their workflow, it’s much worse. Medicine is moving inexorably away from the deductive arts, becoming more technology- and test-dependent and less patient-centric.

Go to an emergency room today and you will likely be met within minutes by a doctor whose sole role is to perform a “rapid medical evaluation.” The provider asks a few questions, ticks boxes on a computer screen and, shazam, you are in line for the most likely series of tests and scans, all based on an encounter that typically lasts less than 60 seconds.

This strategy seems obvious. When workups are initiated as soon as the patient arrives, wait times go down, patient satisfaction goes up, and fewer patients leave out of frustration before even being seen. These are the metrics that put smiles on administrators’ faces and give hospitals high marks in national surveys.

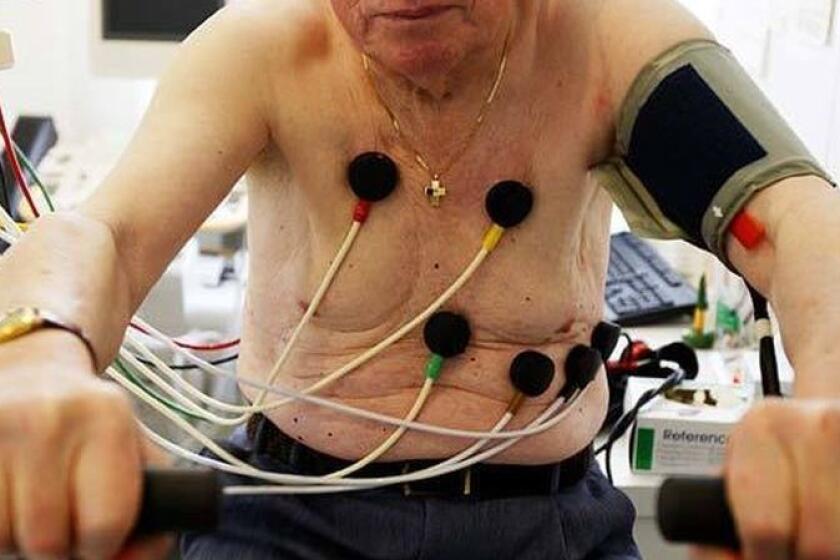

But is it good doctoring? Without the luxury of time, these gateway providers typically lump patients into broad, generic categories: the middle-aged person with chest pain, the short-of-breath asthmatic, the vomiting pregnant patient, the septuagenarian with cough and fever, and so forth. The diagnosis is then reverse-engineered with tests to cover all possible bases for that particular complaint.

In essence, this flips the script on traditional doctoring while incentivizing doctors to use testing as a surrogate for critical thinking, dumbing down the practice of medicine and throwing gasoline on the problem of over-testing.

Since rapid evaluation became the norm, use of laboratory, CT and ultrasound services at my hospital has increased nearly 20%. Just the other day, a pregnant woman in my ER went through a full battery of time-consuming, expensive and invasive tests even though she’d been through all of them at another hospital the day before. As far as I can tell, the only reason we did so was that an algorithm told us to.

This has real effects on patients. Contrary to popular perception, more tests may not supply more answers. That’s because the accuracy of any test depends on the likelihood that the patient has the disease in question before the test is performed. Testing performed without the appropriate indication or context can produce incidental or even spurious results that may have your doctor looking in entirely the wrong direction.
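To make the point about pretest likelihood concrete, here is a small illustrative calculation using Bayes’ theorem. It is a sketch under stated assumptions: the test’s 90% sensitivity and 90% specificity, and the two pretest probabilities (50% when a doctor already suspects the disease, 2% when the test is ordered for everyone with a given complaint), are hypothetical numbers chosen for illustration, not figures for any real test.

```python
def positive_predictive_value(pretest: float, sensitivity: float, specificity: float) -> float:
    """Chance that a patient with a positive result truly has the disease (Bayes' theorem)."""
    true_pos = sensitivity * pretest               # sick patients who correctly test positive
    false_pos = (1 - specificity) * (1 - pretest)  # healthy patients who wrongly test positive
    return true_pos / (true_pos + false_pos)


# Hypothetical test characteristics, for illustration only.
SENS, SPEC = 0.90, 0.90

for label, pretest in [("clinically suspected (50%)", 0.50), ("wide-net ordering (2%)", 0.02)]:
    ppv = positive_predictive_value(pretest, SENS, SPEC)
    print(f"{label}: a positive result reflects real disease {ppv:.0%} of the time")
```

With these assumed numbers, the same positive result is right about 90% of the time when the doctor already has good reason to suspect the disease, but only about 16% of the time when the test is ordered as part of a wide net, meaning most positives in that setting are false alarms.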

The basic problem with hospitals’ growing obsession with efficiency is this: Algorithmic systems treat all patients the same, expecting precise, like-for-like responses to every question with just the right amount of detail. Except every patient is unique. And they tend to give up their stories at their own pace, in broken, non-linear fits and starts, sometimes conflating truth and fiction in ways that can be counterproductive and frustrating, but also uniquely human. I am often reminded of Jack Webb in the old TV series “Dragnet” imploring a witness to offer “just the facts, ma’am, just the facts.” In real life, whether from situational stress, self-delusion, superstition, health illiteracy, mental illness, drugs or alcohol, my patients’ initial version of their complaint is rarely “just the facts” or the final word on the subject.

A colleague recently described her role in a clinical encounter as 9 parts translator to 1 part doctor. One question leads to another, and then another, and another until she successfully translates the patient’s lived experience into a language modern medicine and its algorithms might begin to understand. My experience is similar. Properly choreographed, the doctor-patient interaction becomes a pas de deux — two people in sync, jointly trying to solve a puzzle with each sharing their perspective and expertise. In the transition to front-loaded care, I worry health decisions will be made with information that may be incomplete or, at times, totally unreliable.

Algorithmic medicine also seems tailor-made for an AI takeover. The logic is obvious. Use “big data” to assist doctors and nurses struggling to keep up with the demands of modern medicine. AI can ensure a level, consistent floor of care that avoids errors of omission by considering a deliberately broad list of diagnostic possibilities. In an ideal world, a synergy of human and machine intelligence could amplify the patient-doctor encounter. Just as likely, AI will lead doctors to abdicate judgment and responsibility to the automated response of the machine.

And so, I complimented my resident on her list of concerns but suggested that we spend a little more time with the patient. The story of her symptoms didn’t feel complete. I recommended my resident grab a chair and simply ask the patient about her life. What emerged was the chaotic picture of an exhausted part-time student by day, working two evening waitressing jobs and surviving on pizza, pasta and energy drinks. She had always had a “fragile stomach.”

Our list of reasonable diagnoses expanded and contracted, and the original possibilities were replaced with irritable bowel syndrome, food intolerances and gut motility issues, all overlying a stressed individual barely keeping it together. The labs, ultrasound and CT scan initially proposed now seemed irrelevant.

The result: The patient got out of the hospital faster. She received helpful suggestions about stress reduction, diet and sleep habits. She got an appointment with a primary care physician and avoided thousands of dollars in tests. Had we just relied on tests instead of asking a few more questions, there is a good chance we would have missed the best approach to her problem entirely.

ER waiting rooms and wards are bursting at the seams, and the streamlining of care has never felt more essential. But this is not an excuse for doctors to relinquish their humanity or their “method.” We should tweak the process: Allow more time for doctors to get the story right, do less testing until we have weighed the risks and rewards, prioritize asking questions rather than merely looking for answers.

Sociologists coined the term “pre-automation” to describe the transitional phase in which humans lay the groundwork for automation, often by acting in increasingly machine-like ways. As providers, we must not fall in line.

Put another way, with AI primed to take on a substantial role in how doctors deliver care, we should remind ourselves: If we behave like machines, we certainly won’t be missed when machines replace us.

Eric Snoey is an ER doctor at Alameda Health System-Highland Hospital in Oakland.